The public release of OpenAI’s artificial intelligence (AI) chatbot ChatGPT has recently brought AI to the forefront of the public imagination. Alongside mass fascination with its capabilities and potential uses, its rollout has been accompanied by ardent discussions around the legibility, trustworthiness, accountability, and even agency of AI programmes. For specialists, these issues are far from new, and the design-inflected question of AI explainability has been a pressing concern for programmers and user-interface experts for some time [1].

These recent debates have seen a resurfacing of the language of ‘black boxes’ in a broad public forum. In this context, the phrase is often used critically to conceptualise an understanding gap between a system and its users. It refers to an unknowable space that emerges when a system cannot easily ‘show its working’ to either its users or designers. For many, an accusation of a platform either being or incorporating a black box relates to the impossibility of full control or oversight over it. This typically arises from a lack of comprehension of the inner workings of that system. Prompts go into a black box style algorithm, and information comes out, but the connection between the two cannot be fully understood, even by its programmers [2].

In other words, the computational metaphor of a black box is not associated with colour or form, but with the notion that a system’s output cannot necessarily be deciphered by analysing its inputs. It operates as an unknowable function in the passage of information. The sense of it performing like a ‘box’ has little to do with storage, but rather relates to an intractable containment of hidden knowledge that creates ethically significant problems of causality (cause and effect) and accountability. For similar, largely symbolic, reasons, the terminology of black boxes finds another well-known (mis)use in the field of aviation. Again, the persistent metaphor is associated with the containment of something, in this case, the rarefied information about events that transpired in the final minutes of an ill-fated aircraft. In both, a black box metaphor appears at a moment of uncertainty between causes and effects.

The design of theatre auditoriums can help to conceptualise some of the consequences of living with black boxes at a human scale and in a spatial sense. In his influential book Suspensions of Perception: Attention, Spectacle and Modern Culture, cultural theorist Jonathan Crary points to the adaptations that Richard Wagner made to the design of the Festspielhaus in Bayreuth as a turning point in the dramatist’s ability to dominate audience attention [3]. This purpose-built festival hall, opened in 1876, saw Wagner make now-famous infrastructural interventions that would, he hoped, encourage his audiences to engage with the fictional worlds presented onstage in a more absorbed, even hypnotic way. Removing the sideways-facing booths from the seating, visually shielding his orchestra from the audience, and dimming the lights in the auditorium are perhaps the best-cited examples of the type of adaptations he demanded.

Crary, however, emphasises the significance of a less well-known innovation, an optical effect that would go on to be known as Wagner’s ‘mystic abyss’, in achieving a desired totalising engrossment of his audience in the presented scene [4]. This effect – the ‘mystic abyss’ – refers to the intentional insertion of unknowable distance between the stage space and auditorium, achieved by separating the two with a series of receding, perspective-distorting proscenium arches. This intervention disrupted all continuous sight lines between stage space and the auditorium, thus perceptually and epistemologically severing the visual bonds between real space and fiction. In so doing, the mystic abyss demanded that audience members undergo a more fully realised abandonment within the scene presented. They were encouraged to ‘pick a side’ between fiction and reality in a perceptual sense.

Contemporary black box theatres, arguably and ironically, represent a move away from these hallucinatory priorities. While, on the one hand, some elements carry an inheritance from Wagner and early modern scenographers (their blank flexibility, typically low house-lighting and matt-black surfaces that visually privilege the fictional space on stage), on the other hand, their frequent ‘in the round’ layout means that their audiences tend to be more self-aware and often have the impression of sharing the event space with the performers. Again, the metaphorical name black box does not refer to their colour or shape, but rather to a more generalised aesthetic of containment of a space of fiction in a self-consistent interiority (box), supported by a humility of the playing space that bends to meet the various fictions that inhabit it (black). Unlike Wagner’s passive, hypnotised audiences, stripped of autonomy – if we are to follow Crary on this – these groups inhabit the same forum as the performers [5]. In this case, the ‘suspension of disbelief’ tends to be requested rather than insisted upon, as the border of the theatrical universe is situated close to the entrance to the auditorium rather than between proscenium arches.

In a theatrical black box – unlike an AI-powered chatbot or a flight recorder – the human element is ‘on the inside’, sharing a space and collaborating somewhat in the event that is live theatre. It might be hard to convey the full essence of what happens within a temporary theatrical universe to someone who never saw the show, but each event is always a joint venture.

The question of explainability in AI is not a settled issue in computer science, with some developers believing that too much potential is lost in the process of making an algorithm fully explainable to humans. In the context of these decisions being made away from the public forum, it is important for the rest of us to consider what costs must be paid in terms of accountability and autonomy in exchange for the enchantment and wonder earned across a mystic abyss.

Future Reference is supported by the Arts Council through the Architecture Project Award Round 2 2022.

McCormack’s was established in 1984; its current owner is Daire O’Flaherty. At only 18 m², this shop had a powerful presence. It poured out onto the footpath with negotiations of punctures, pedals, pumps, prices, all conducted in the plein air of Dublin's Appian Way: Dorset Street. Pronounced in Dublin-ese with two distinctly independent syllables, Dor-set, the common pronunciation is miles from the English variant, and light years from the ancient route it is descended from: Slige Midluachra [1].

Unlike the case of the ill-fated Delaney’s bike shop in Harold’s Cross, allegedly Dublin’s oldest [2], it was not the increasing cost of business that shut McCormack’s. Right up to its closure, this institution was hopping, with a lively mix of locals and commuters dropping by. According to the shop owner, it was closed because the landowner valued the site more highly once it was vacant [3].

The bike shop was part of a three-storey suite of early Victorian buildings, with a modified-Georgian terrace lining the west of it. Over the past few years its neighbouring buildings have similarly been drained of residential and retail tenants. Relatively recently this city block was home to a multitude of residents and traders. Today the signature calling card of vacancy is visible: permanently-opened windows in the upstairs accommodation, allowing the elements in. Nobody lives or works here to shut them, to provide essential daily care for the properties.

Daire is well versed in, and articulate about, what makes a city, both at ground level and in the urban theory behind it. On Dorset Street, he had a frontline view of Dublin’s traffic congestion during the morning rush hour, the worst it has ever been. He sees an opportunity in the widespread overhaul of the city’s transport system, which is being redesigned to prioritise public transport for decarbonisation and public health: more and more people are choosing bikes to get into work and to live healthily in the city. In his view, bikes are a key component of any liveable city, like Paris or Amsterdam perhaps. What good will the ambition to encourage cycling be without centrally located facilities for bike repair and maintenance? It is akin to building a motorway without providing for new garages or fuel stations.

McCormack’s now has a premises in Drumcondra, not far from its former home. Daire has yet to establish whether business has actually improved. However, the same lack of protection exists in the new premises. While there are limitations to hosting a bike shop in a small retail unit (cycling shops are ideally suited to larger premises), McCormack’s 41 years in business clearly evidence the ability to adapt and survive in small, tight spaces. Shutting small independent retailers down will make larger out-of-town suburban shops more tempting to customers. It also offloads a responsibility to repair the existing urban fabric – an essential and under-practised aspect of the circular economy.

At a municipal level, there are no obvious consequences for ousting an established small business tenant in pursuit of greater profit, nor any meaningful incentives for landlords to help their continued operation. The desire to sell off buildings with a clean slate of no sitting tenants is widespread in Dublin, and the results are most keenly felt by the communities who make use of small businesses.

The closure of specialised small businesses, like bike shops, locksmiths, butchers and grocers, is part of a broader list of fatalities to our city, with the loss of art and cultural spaces, pubs and restaurants regularly causing public outcry. In an ecosystem of property speculation, few tenancies are safe. The liveable city we aspire to is increasingly precarious.

While these changes can seem inevitable and often happen stealthily over time, the failure of policy to protect small independent businesses will cost the city in easily-measurable ways. Daire famously once broke up a fight between two locals arguing over money; he, and many others like him, are eyes, ears and a friend to the street. This is increasingly relevant when the media and political debate focus on inner-city crime and public safety.

The fight for the city is not just in art spaces, pubs and heritage properties; it is also the fight to protect small independent retailers and those committed to living and doing business in our city. If Dublin is to stay open for business, it has to protect them too. If indeed ‘we are Dublin Town’, let us aim to be like Paris or Berlin, with a feast of small independent retailers providing vibrancy to streets [4]. Task forces looking at the bigger picture of our urban centre, encouraging external investment in the city, need to be clear-eyed on the draining of its smaller, but equally essential, tenants. Otherwise fixing the city through grand gestures will be like trying to save a marriage while having an affair.

After forty-one years in business, what was probably Dublin’s smallest bike shop, McCormack’s on Dorset Street, pulled down the shutters for the last time. In this article, Róisín Murphy uses the closure as a lens on the wider disappearance of small, long-standing businesses from the city, asking how liveable Dublin can remain if independent traders and venues continue to vanish.


This year’s presidential election made visible a dynamic that is often overlooked in political analysis: how campaigns operate as a form of civic infrastructure, and to what extent design plays a role in their efficacy. Far from being peripheral or decorative, the visual strategies deployed by candidates structure how people encounter political life; they shape perceptions long before policy is discussed or manifestos are read. Political design occupies a unique position within democracies, somewhere at the intersection of communication, civic identity, and public trust.

In Ireland, this relationship between design and democratic expression has been strained by a decades-long pattern of executive neglect. Successive governments have systematically deprioritised design and aesthetic quality in public communication and built infrastructure. Senior ministers increasingly frame design as an optional consideration, an unnecessary add-on rather than a fundamental part of how the State articulates care, competence, and regard for its people. As Minister for Public Expenditure Jack Chambers stated during a debate concerning escalating costs at the National Children’s Hospital (NCH), ‘there needs to be much better discipline in cost effectiveness… That means making choices around cost and efficiency over design standards and aesthetics in some instances’ [1].

This position, widely cited and contested, exemplifies a broader ideological shift which sees design treated as a dispensable luxury rather than an essential civic tool [2]. This framing misunderstands the function of design within public life. Design, in this case, is not ornamental; it is a mode of communication through which the State makes itself legible. When design is neglected, the consequences extend far beyond the aesthetic and shape the conditions under which political meaning, public trust, and civic visibility are formed.

In the aftermath of Catherine Connolly's election as President, commentators highlighted the design and visual expression of each candidate as decisive factors [3]. Connolly’s campaign offered me a rare opportunity to explore what an authentically Irish political visual identity might look like when grounded in cultural memory rather than branding for the sake of visuals alone. While designing, I drew directly from Ireland’s vernacular signwriting tradition: the hand-painted shopfronts, gilded fascias, and serifed letterforms that once defined the visual texture of towns and villages. These were not simply aesthetic references. They embodied authorship, locality, and a sense of civic care.

By incorporating hand-drawn lettering, a deep green and cream palette, and a postage-stamp motif, the campaign sought to restore the tactile warmth and humanity often lost in contemporary political design. The stamp, a quiet symbol of communication and exchange, is a reminder that politics is, at its core, a conversation carried between people. This concept frames Irish craft traditions not as relics, but as living cultural practices capable of shaping contemporary civic discourse.

In doing so, Connolly’s campaign made design itself an act of cultural continuity, a way of honouring the past while proposing a more grounded and participatory future. By the time Connolly declared on election night, “This win is not for me, but for us,” the sentiment had already been woven through posters, leaflets, and social media, a visual testament to a campaign that made the collective visible long before the votes were counted [4].

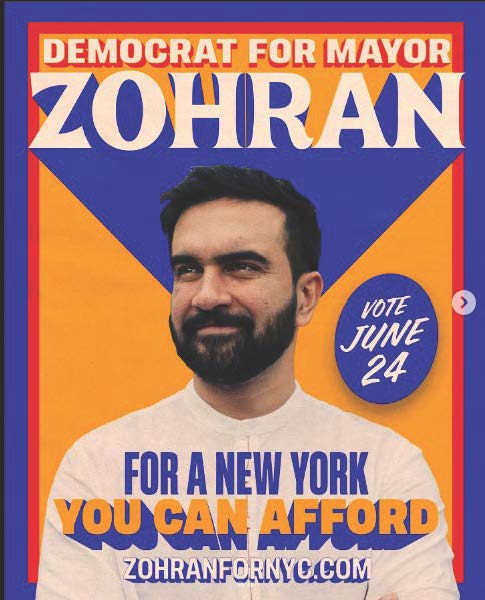

Across the Atlantic, Zohran Mamdani’s mayoral campaign in New York City attracted attention first for his democratic socialist views. It was the striking coherence of his campaign design, however, that propelled him into broader public discourse. Not since Shepard Fairey’s Hope poster, for Barack Obama, had a political image circulated so widely. It gained the kind of immediate recognition associated with Jim Fitzpatrick’s image of Che Guevara.

The Mamdani campaign was intentionally rooted in the material and cultural vernacular of the city itself. The cobalt blue and yellow palette was drawn directly from everyday sights in New York: bodega awnings, taxi cabs, MetroCards, hot dog vendors, and the signage of small independent businesses [5]. In this way, the campaign aligned itself with working-class infrastructure that defines the city’s public life, situating Mamdani not as an outsider but as a candidate embedded in the city’s social, cultural and economic rhythms [6]. Central to this strategy was the premise that design could serve as a communicative bridge to the constituency Mamdani sought to represent. In doing so, the campaign framed visual culture as a mode of continuity and care, a reminder that political communication can affirm belonging as powerfully as it persuades.

Irish election materials, as well as the State's political design more generally, don't attempt to convey substantive meaning through visuals. Their long-standing reliance on formulaic portraiture, generic slogans, and minimal graphic refinement mirrors a broader campaign strategy in which candidates are packaged as approachable local figures using highly-conventionalised visual cues. This approach reduces design to a mechanism for name recall rather than a vehicle for articulating political values or fostering civic engagement. The environmental waste associated with poster production only heightens the sense of outdatedness and underscores how Irish campaign materials often lag behind the more considered, narrative-driven strategies emerging elsewhere. As such, this tradition of visual identity crystallises the limitations of Irish political branding: a dependence on repetition, familiarity, and low-risk aesthetics at the expense of meaningful visual communication.

A strong democracy depends on sustained, accessible dialogue between the State and its people. Visual identity is structurally embedded within this exchange. Visual languages that are familiar or culturally resonant reduce cognitive load and strengthen affective engagement, whereas generic or stylistically flattened forms tend to weaken meaning-making [7]. In this sense, campaign aesthetics function as a form of civic infrastructure, shaping perceptions of authority, intention, and legitimacy before a single word is spoken.

When design is framed as a luxury rather than an essential component of civic life, it erodes the shared visual language through which democratic communication occurs. Such an approach initiates a feedback loop. Minimal investment in design yields fewer meaningful symbolic or material expressions of public life. As these expressions diminish, the State becomes increasingly illegible to its people. Over time, the corporeal presence of the State, its visibility in the everyday, degrades. What was once a free-flowing dialogue becomes generic, flattened, and emotionally inert. Political branding therefore mirrors the State’s broader orientation toward public infrastructure. When design is treated as secondary, a dispensable aesthetic layer rather than a civic medium, its communicative and democratic potential collapses. When taken seriously, however, design becomes a point at which cultural belonging, political intent, and civic participation converge.

Ireland’s future civic health depends not on dispensing with design but on recognising it as a central component of public life. It is the medium through which the State becomes visible, legible, and trustworthy.

The views expressed in this article are the author's own.

Highly visible and emotionally charged, electoral campaigns are often the first instance in which a state’s people encounter their elected representatives. In this article, Anna Cassidy, designer for Catherine Connolly's presidential campaign, examines how political design is indispensable to the democratic process.


“[W]asn't this all started by some terminally online moron in trinity? … Nobody gives a shite so long as the statue isn't actually being damaged” wrote [Deleted] on the Reddit page r/Ireland, in a thread discussing Dublin City Council's proposals to stop the repeated groping of the Molly Malone statue on Suffolk Street. Her breasts have been touched so often by the sweaty hands of tourists that the dark patina has been worn away to reveal the earthy metallic dark orange of the bronze from which the mythical fishwife was cast. Thousands of images of Molly #mollymalone circulate on TikTok. A group of men dressed in Jack Charlton-era Irish football jerseys stand in line to rub their faces in her breasts. In the comments section one user posts, “reminder she’s 15 in this statue,” while others disagree, claiming she was older, as if somehow the behaviour would be permissible if the statue represented Molly as 17 – the legal age of consent. Others use the platform to protest the behaviour.

If you ask Google’s AI Gemini about the practice, it tells you that “this practice is now discouraged by authorities for preservation reasons.” This is artificial stupidity, a view blind to a far more important problem, one that philosopher Sylvia Wynter described as an urban planning that assumes the male-coded subject as the norm, while others – women, Black, Indigenous, and colonised peoples – are excluded, marginalised, or rendered invisible [1]. For Wynter, urban space is ontologically male, in that its logics of design, governance, and belonging reproduce a gendered and racialised “Man” as the universal standard of being. Speaking to RTÉ Radio One, DCC Arts Officer Ray Yeates (a man) suggested that one solution could be to “just accept that this behaviour is something that occurs worldwide with statues” – human stupidity [2]. Perhaps Yeates might agree to a plaque being added, inscribed with a quote from Wynter: “Man … overrepresents itself as if it were the human itself” [3].

As images of the statue circulate online, they both promote and raise awareness of this deleterious practice. But this is the means and not the end of their circulation. These images turn Suffolk Street into a space for the production of a strange kind of economic exchange. With one sweaty hand on a breast, and the other on a smartphone, tourists become workers. Here, as in all of everyday life, a distinction can no longer be made between work and play. In our age of contemporary digital technology all of everyday life is a factory. To play is to work; the digital proletariat; to use a technological prosthesis is to be used by that prosthesis. These interfaces, designed for the many by and for the benefit of the few, manage life by means of ‘fun’. Spaces like Suffolk Street are, as Letizia Chiappini writes, where “[a]ffect, desire, pain, and love, are digitally mobilised for direct spatial impact” [4].

Henri Lefebvre called this abstract space – “[t]he predominance of the visual (or more precisely of the geometric-visual-spatial)” [5]. He described this kind of logic as a planetary mesh that has been thrown over all space [6]. Any space, anything, anywhere, no matter how banal, is subject to this logic. 13,461 km away from the Molly Malone statue is an underpass in the Chinese city of Guilin. Each night crowds of outdoor live streamers gather to stream content on Douyin (the Chinese version of TikTok), their faces glowing in the phosphorus white of selfie lamps. Geolocation means that if they are closer to more prosperous neighbourhoods then they make more money from the wealthy clients who live there. These leftover urban spaces, seen as unattractive and once disregarded in a capitalist economy, have become spaces where new economies and ways of working emerge. I have written elsewhere about the disproportionate role that Ireland plays in facilitating the infrastructures that produce these kinds of spaces [7]. This is a new kind of geopolitics, one facilitated by State fiscal policies, such as in Ireland, home of one of the lowest standard corporate tax rates in the EU.

This is capitalism incarnate – capitalism become flesh. Everything has an exchange value. There is not a thing that cannot be transformed into a commodity to be circulated in an economy of flesh, thoughts, drives and desires. This is an economy governed by images, subject to what legal scholar Antoinette Rouvroy calls algorithmic governance – the governance of “the social world that is based on the algorithmic processing of big data sets rather than on politics, law, and social norms” [8]. The statue of Molly is a public surface subject to an extractive logic; via the lens, she is engineered for constant circulation, interaction, and capture. The statue as code has her meaning flattened into content for the purpose of data extraction and ad revenue. This kind of collapsing together of work and leisure is a weapon of mass distraction. It removes us from everyday life, producing what philosopher Henri Lefebvre called a “transcendental contempt for the real” [9].

Lefebvre also called for a right to the city, by which he meant the right to the production of truly democratic space: space that is not subject to capitalist abstraction. To what extent this is even possible in our precarious age of algorithmic governance is questionable – but nonetheless we must seek to understand, hope and act.

The groping of the Molly Malone statue in Dublin reveals a complex new urban condition – the algorithmic production of space. Social media, viral images, and new modes of capitalist production foreground the emergence of an entirely new logic of spatial production. What does this mean for the possibility of a right to the city?
